http://www.gamasutra.com/features/20000406/lander_01.htm

"

Early work focussed on the animation of geometrical facial models, which can be performed using predefined expression and mouth shape components [1][2][3]. The focus then shifted to physics-based anatomical face models, with animation performed using numerical simulation of muscle actions [4][5]. More recently an alternative approach has emerged which takes photographs of a real individual enunciating the different phonemes and concatenates or interpolates between them [6][7][8].

Incorrect or absent visual speech cues are an impediment to the correct interpretation of speech for both hearing and lip-reading viewers [10].

The resultant viseme images can then be integrated with a text-to-speech engine and animated to produce realtime photo realistic visual speech.

The simplest approach to producing animated speech using visemes is to simply switch to the image that corresponds to the current phoneme being spoken. Several existing speech engines support concatenated viseme visual speech by reporting either the current phoneme or a suggested viseme. For example, the Microsoft Speech API (SAPI) will report which of the 21 Disney visemes is currently being spoken. We use a table to convert between our extended Ezzat and Poggio set and the Disney set. For slightly smoother visual speech we detect changes in viseme and form a 50/50 blend of the previous and current viseme.

other head movements - blinking, nods and expression changes, required for realism."
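
The switch-and-blend idea described in the quote can be sketched roughly as follows. This is only a minimal Python illustration, not the authors' implementation: the names `blend` and `frames_for`, the representation of an image as a flat list of pixel values, and the choice to show the blend as one extra transition frame before the new viseme are all assumptions made for the example.

```python
def blend(image_a, image_b, weight=0.5):
    """Linearly blend two equally sized images (flat lists of pixel values).

    weight=0.5 gives the 50/50 mix of previous and current viseme.
    """
    if len(image_a) != len(image_b):
        raise ValueError("images must have the same number of pixels")
    return [(1.0 - weight) * a + weight * b for a, b in zip(image_a, image_b)]


def frames_for(viseme_ids, images):
    """Yield display frames for a stream of reported viseme ids.

    Assumption: when the id changes, one blended transition frame is
    shown first, followed by the image for the new viseme.
    """
    previous = None
    for viseme_id in viseme_ids:
        current = images[viseme_id]
        if previous is not None and viseme_id != previous:
            yield blend(images[previous], current)
        yield current
        previous = viseme_id


# Hypothetical two-pixel "images" keyed by viseme id, for demonstration only.
demo_images = {0: [0.0, 0.0], 21: [1.0, 0.5]}
demo_frames = list(frames_for([0, 0, 21], demo_images))
```

In a real system the images would be full bitmaps and the viseme ids would arrive from the speech engine as notifications, but the change detection and the 50/50 mix would look much the same.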

Many animations are based on the Disney visemes:

http://www.gamasutra.com/features/20000406/lander_01.htm

Disney Visemes:

Phonemes and Visemes: No discussion of facial animation is possible without discussing phonemes. Jake Rodgers’s article “Animating Facial Expressions” (Game Developer, November 1998) defined a phoneme as an abstract unit of the phonetic system of a language that corresponds to a set of similar speech sounds. More simply, phonemes are the individual sounds that make up speech. A naive facial animation system may attempt to create a separate facial position for each phoneme. However, in English (at least where I speak it) there are about 35 phonemes. Other regional dialects may add more.

Now, that’s a lot of facial positions to create and keep organized. Luckily, the Disney animators realized a long time ago that using all phonemes was overkill. When creating animation, an artist is not concerned with individual sounds, just how the mouth looks while making them. Fewer facial positions are necessary to visually represent speech since several sounds can be made with the same mouth position. These visual references to groups of phonemes are called visemes. How do I know which phonemes to combine into one viseme? Disney animators relied on a chart of 12 archetypal mouth positions to represent speech as you can see in Figure 1.

Each mouth position or viseme represented one or more phonemes. I have seen many facial animation guidelines with different numbers of visemes and different organizations of phonemes. They all seem to be similar to the Disney 12, but also seem like they involved animators talking to a mirror and doing some guessing.

Along with the animator’s eye for mouth positions, there are the more scientific models that reduce sounds into visual components. For the deaf community, which does not hear phonemes, spoken language recognition relies entirely on lip reading. Lip-reading samples base speech recognition on 18 speech postures. Some of these mouth postures show very subtle differences that a hearing individual may not see.

http://www.generation5.org/content/2001/visemes.asp

Visemes: Representing Mouth Positions By James Matthews

SAPI provides the programmer with a very powerful feature - viseme notification. A viseme refers to the mouth position currently being "used" by the speaker. SAPI 5 uses the Disney 13 visemes:

    typedef enum SPVISEMES
    {
                            // English examples
                            //------------------
        SP_VISEME_0 = 0,    // silence
        SP_VISEME_1,        // ae, ax, ah
        SP_VISEME_2,        // aa
        SP_VISEME_3,        // ao
        SP_VISEME_4,        // ey, eh, uh
        SP_VISEME_5,        // er
        SP_VISEME_6,        // y, iy, ih, ix
        SP_VISEME_7,        // w, uw
        SP_VISEME_8,        // ow
        SP_VISEME_9,        // aw
        SP_VISEME_10,       // oy
        SP_VISEME_11,       // ay
        SP_VISEME_12,       // h
        SP_VISEME_13,       // r
        SP_VISEME_14,       // l
        SP_VISEME_15,       // s, z
        SP_VISEME_16,       // sh, ch, jh, zh
        SP_VISEME_17,       // th, dh
        SP_VISEME_18,       // f, v
        SP_VISEME_19,       // d, t, n
        SP_VISEME_20,       // k, g, ng
        SP_VISEME_21,       // p, b, m
    } SPVISEMES;

Every time a viseme is used, the SAPI5 engine can send your application a notification which it can use to draw the mouth position. Microsoft provides an excellent example of this with its SAPI5 SDK, called TTSApp. TTSApp is written using the standard Win32 SDK and has additional features that bog down the code. Therefore, I created my own version using MFC that is hopefully a little easier to understand.

Using visemes is relatively simple; it is the graphical side of it that is the hard part. This is why, for demonstration purposes, I used the microphone character that was used in the TTSApp.

http://msdn.microsoft.com/en-us/library/ms720881(VS.85).aspx

The SpeechVisemeType enumeration lists the visemes supported by the SpVoice object. This list is based on the original Disney visemes.

Definition

    Enum SpeechVisemeType
        SVP_0 = 0     'silence
        SVP_1 = 1     'ae ax ah
        SVP_2 = 2     'aa
        SVP_3 = 3     'ao
        SVP_4 = 4     'ey eh uh
        SVP_5 = 5     'er
        SVP_6 = 6     'y iy ih ix
        SVP_7 = 7     'w uw
        SVP_8 = 8     'ow
        SVP_9 = 9     'aw
        SVP_10 = 10   'oy
        SVP_11 = 11   'ay
        SVP_12 = 12   'h
        SVP_13 = 13   'r
        SVP_14 = 14   'l
        SVP_15 = 15   's z
        SVP_16 = 16   'sh ch jh zh
        SVP_17 = 17   'th dh
        SVP_18 = 18   'f v
        SVP_19 = 19   'd t n
        SVP_20 = 20   'k g ng
        SVP_21 = 21   'p b m
    End Enum

Elements

SVP_0

The viseme representing silence.

SVP_1

The viseme representing ae, ax, and ah.

SVP_2

The viseme representing aa.

SVP_3

The viseme representing ao.

SVP_4

The viseme representing ey, eh, and uh.

SVP_5

The viseme representing er.

SVP_6

The viseme representing y, iy, ih, and ix.

SVP_7

The viseme representing w and uw.

SVP_8

The viseme representing ow.

SVP_9

The viseme representing aw.

SVP_10

The viseme representing oy.

SVP_11

The viseme representing ay.

SVP_12

The viseme representing h.

SVP_13

The viseme representing r.

SVP_14

The viseme representing l.

SVP_15

The viseme representing s and z.

SVP_16

The viseme representing sh, ch, jh, and zh.

SVP_17

The viseme representing th and dh.

SVP_18

The viseme representing f and v.

SVP_19

The viseme representing d, t and n.

SVP_20

The viseme representing k, g and ng.

SVP_21

The viseme representing p, b and m.
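
The enumeration above amounts to a lookup table from phoneme to viseme. A minimal Python sketch of that table follows. The phoneme groups are copied from the SAPI list; the names `SAPI_VISEME_PHONEMES`, `PHONEME_TO_VISEME` and `visemes_for`, and the fallback to the silence viseme for unknown phonemes, are assumptions for illustration and are not part of SAPI.

```python
# Viseme id -> phonemes, as listed in the SpeechVisemeType enumeration.
SAPI_VISEME_PHONEMES = {
    0: [],                      # silence
    1: ["ae", "ax", "ah"],
    2: ["aa"],
    3: ["ao"],
    4: ["ey", "eh", "uh"],
    5: ["er"],
    6: ["y", "iy", "ih", "ix"],
    7: ["w", "uw"],
    8: ["ow"],
    9: ["aw"],
    10: ["oy"],
    11: ["ay"],
    12: ["h"],
    13: ["r"],
    14: ["l"],
    15: ["s", "z"],
    16: ["sh", "ch", "jh", "zh"],
    17: ["th", "dh"],
    18: ["f", "v"],
    19: ["d", "t", "n"],
    20: ["k", "g", "ng"],
    21: ["p", "b", "m"],
}

# Invert the table so each phoneme maps to exactly one viseme id.
PHONEME_TO_VISEME = {
    phoneme: viseme_id
    for viseme_id, phonemes in SAPI_VISEME_PHONEMES.items()
    for phoneme in phonemes
}


def visemes_for(phonemes, default=0):
    """Map a phoneme sequence to viseme ids.

    Assumption: phonemes missing from the table fall back to silence (0).
    """
    return [PHONEME_TO_VISEME.get(p, default) for p in phonemes]


# "hello" written with the phoneme labels used in the list above.
hello_visemes = visemes_for(["h", "eh", "l", "ow"])
```

The same inversion would work for any of the other viseme charts quoted in this post, since each one is just a different grouping of phonemes.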

http://www.gamasutra.com/view/feature/3179/read_my_lips_facial_animation_.php?page=2

"Visemes

1. [p, b, m] - Closed lips.

2. [w] & [boot] - Pursed lips.

3. [r*] & [book] - Rounded open lips with corner of lips slightly puckered. If you look at Chart 1, [r] is made in the same place in the mouth as the sounds of #7 below. One of the attributes not denoted in the chart is lip rounding. If [r] is at the beginning of a word, then it fits here. Try saying “right” vs. “car.”

4. [v] & [f ] - Lower lip drawn up to upper teeth.

5. [thy] & [thigh] - Tongue between teeth, no gaps on sides.

6. [l] - Tip of tongue behind open teeth, gaps on sides.

7. [d,t,z,s,r*,n] - Relaxed mouth with mostly closed teeth with pinkness of tongue behind teeth (tip of tongue on ridge behind upper teeth).

8. [vision, shy, jive, chime] Slightly open mouth with mostly closed teeth and corners of lips slightly tightened.

9. [y, g, k, hang, uh-oh] - Slightly open mouth with mostly closed teeth.

10. [beat, bit] - Wide, slightly open mouth.

11. [bait, bet, but] - Neutral mouth with slightly parted teeth and slightly dropped jaw.

12. [boat] - very round lips, slight dropped jaw.

13. [bat, bought] - open mouth with very dropped jaw."

Emphasis and exaggeration are also very important in animation. You may wish to punch up a sound by the use of a viseme to punctuate the animation. This emphasis along with the addition of secondary animation to express emotion is key to a believable sequence. In addition to these viseme frames, you will want to have a neutral frame that you can use for pauses. In fast speech, you may not want to add the neutral frame between all words, but in general it gives good visual cues to sentence boundaries.
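
One way to act on the neutral-frame advice is to insert a neutral keyframe only where the gap before the next sound is long enough, so that fast speech is left alone. The sketch below assumes a hypothetical input format of `(start, end, viseme)` tuples in seconds and a hypothetical `min_pause` threshold; neither comes from the article.

```python
NEUTRAL = "neutral"  # placeholder label for the neutral mouth frame


def add_neutral_frames(segments, min_pause=0.15):
    """Turn timed viseme segments into keyframes, adding neutral frames at pauses.

    segments: list of (start, end, viseme) tuples sorted by start time.
    A neutral keyframe is added at the end of a segment when the gap to
    the next segment is at least min_pause seconds, and after the last one.
    """
    keyframes = []
    for index, (start, end, viseme) in enumerate(segments):
        keyframes.append((start, viseme))
        is_last = index + 1 == len(segments)
        if is_last or segments[index + 1][0] - end >= min_pause:
            keyframes.append((end, NEUTRAL))
    return keyframes


# Two sounds run together, then a half-second pause before the third.
demo_segments = [(0.0, 0.2, 1), (0.2, 0.4, 12), (0.9, 1.1, 1)]
demo_keyframes = add_neutral_frames(demo_segments)
```

Raising `min_pause` suppresses neutral frames between quickly spoken words, while still marking sentence boundaries where the speaker actually stops.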

The list of phonemes above corresponds with the Disney visemes.

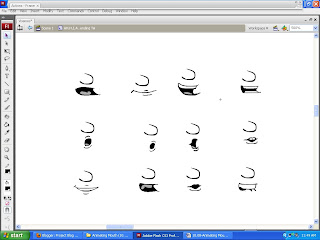

I have designed my own equivalent of the Disney visemes:

Corresponding with phonemes:

(From left to right across)

1) AH, H, I, A..ending TA; 2) BA, B, MMM, P; 3) CGJKSZTS; 4) E; 5) O; 6) OOO; 7) Q U; 8) R; 9) silence; 10) start-D, T, THA, LA; 11) ending-L, N, start-WHA, Y; 12) vee, f, fah

- I had to ensure that all elements of the face are identical in each viseme. This will aid animation later.

- I designed the nose first and then the lips around that.

- I decided to omit the eyes from this part of the animation as they don't particularly add to the mouth shape guide.

- Hopefully the character will be totally interactive (i.e. the user can choose its appearance), so the eyes could be chosen from a range of pre-designed eyes.

- With these visemes prepared for animation there's only one thing to do... Get animating! Synch up to sound, and deal with sound appropriately (i.e. error catching, ensuring it only plays once fully loaded, use of channels, etc.).
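
For the syncing step, one simple approach is to keep a sorted track of `(time, viseme)` keyframes and look up the active viseme from the sound's current playback position. The sketch below is only an illustration under that assumption: `viseme_at` and the demo track are hypothetical, and the viseme numbers refer to my twelve groups above (9 is silence, 2 is BA/B/MMM/P, 1 is AH).

```python
import bisect


def viseme_at(track, position):
    """Return the viseme active at a playback position (in seconds).

    track: list of (time, viseme) keyframes sorted by time.
    Returns None if the position is before the first keyframe.
    """
    times = [time for time, _ in track]
    # Index of the last keyframe whose time is <= position.
    index = bisect.bisect_right(times, position) - 1
    return track[index][1] if index >= 0 else None


# Hypothetical track: silence, then a "B" shape, then an "AH" shape.
demo_track = [(0.0, 9), (0.25, 2), (0.5, 1)]
current = viseme_at(demo_track, 0.3)
```

Driving the mouth from the playback position, instead of from a separate timer, means the animation stays in step even if the sound starts late because it was still loading.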

Extra Reading:

Prototyping and Transforming Visemes for Animated Speech

Bernard Tiddeman and David Perrett

School of Computer Science and School of Psychology,

University of St Andrews, Fife KY16 9JU.

This will be handy for the report.
